Algorithms are seductive because they implicitly suggest that their results are free from human judgement or bias. In our project we believe that these assumptions must be challenged. Algorithms are remarkable pieces of technology, but they have the power to do great harm if not used ethically and responsibly.

A recent case in the UK is illustrative of this. The General Certificate of Education (GCE) Advanced Level (often simply called the A-levels) is the signature examination UK students take in school; the grades achieved determine what the student will be able to study at the post-secondary level. Coveted places in medicine, dentistry, computer science, and engineering are locked behind high grade requirements. Due to the ongoing COVID-19 pandemic (which has been severe, particularly in England), the decision was made to cancel the A-levels. It seemed irresponsible and dangerous to have thousands of students gathered in enclosed spaces for hours at a time when the NHS was already struggling with existing cases. This seems to have been a wise decision.

What was less wise was the choice by the UK government to allow an algorithm to determine what grades the students would get. The system seemed reasonable from a theoretical standpoint: students’ grades would be assessed based on their previous results, meaning that the students who had been working hard all along would have those efforts factored into their final grade. An unfortunate effect was that the algorithm discounted the possibility that students with a weak record of grades would ‘rally’ at the last minute and study hard for the exams.

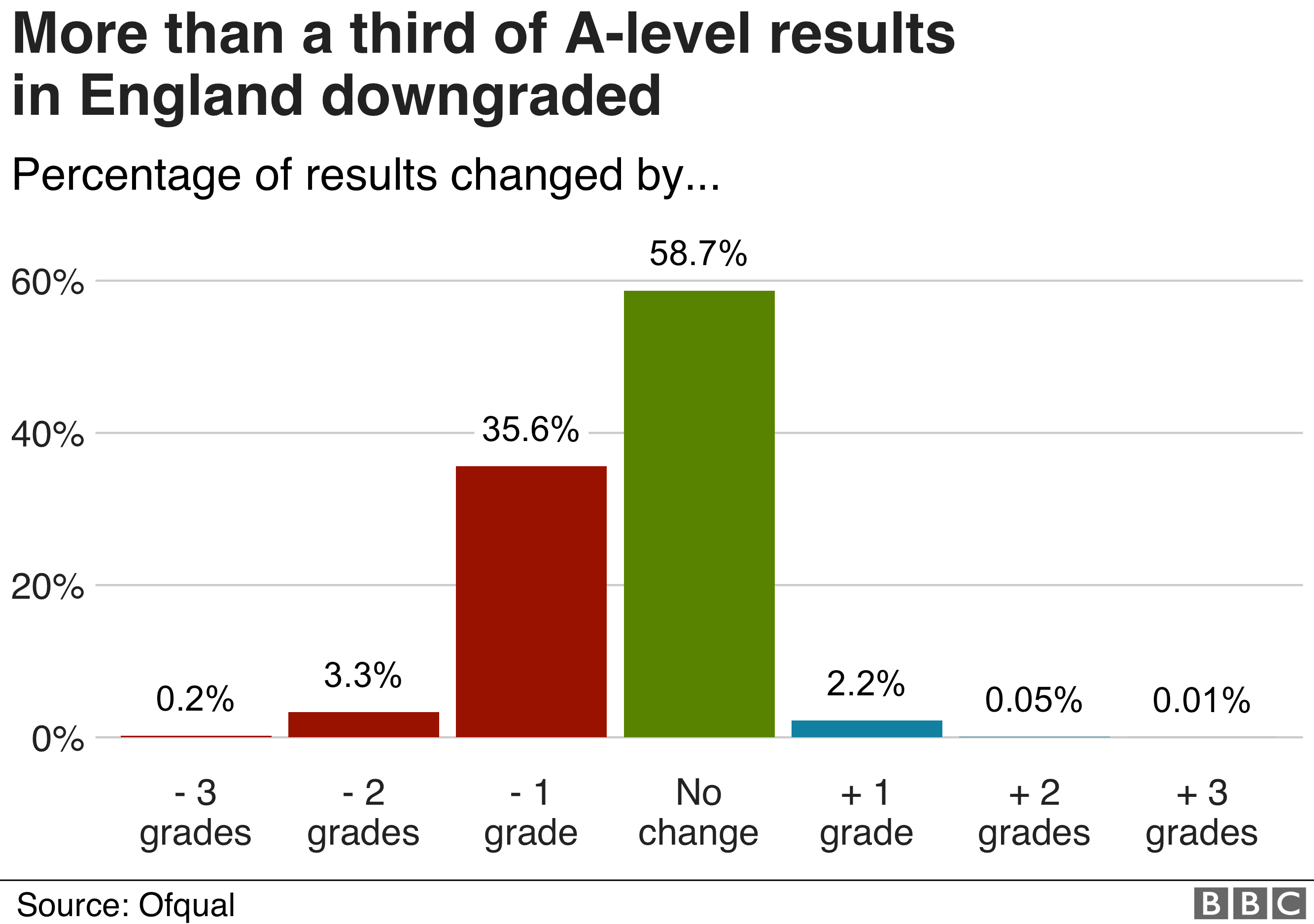

Results day, always fraught with panic and peril, was nothing short of a disaster, and as the smoke cleared and the reality of the situation became obvious, hard questions were asked about how the results were calculated. In advance of receiving their results, students were given a predicted grade by their teachers. These predictions are often an excellent indicator of the final result, but not this time. In England alone, more than a third of students received grades lower than those predicted by their teachers.

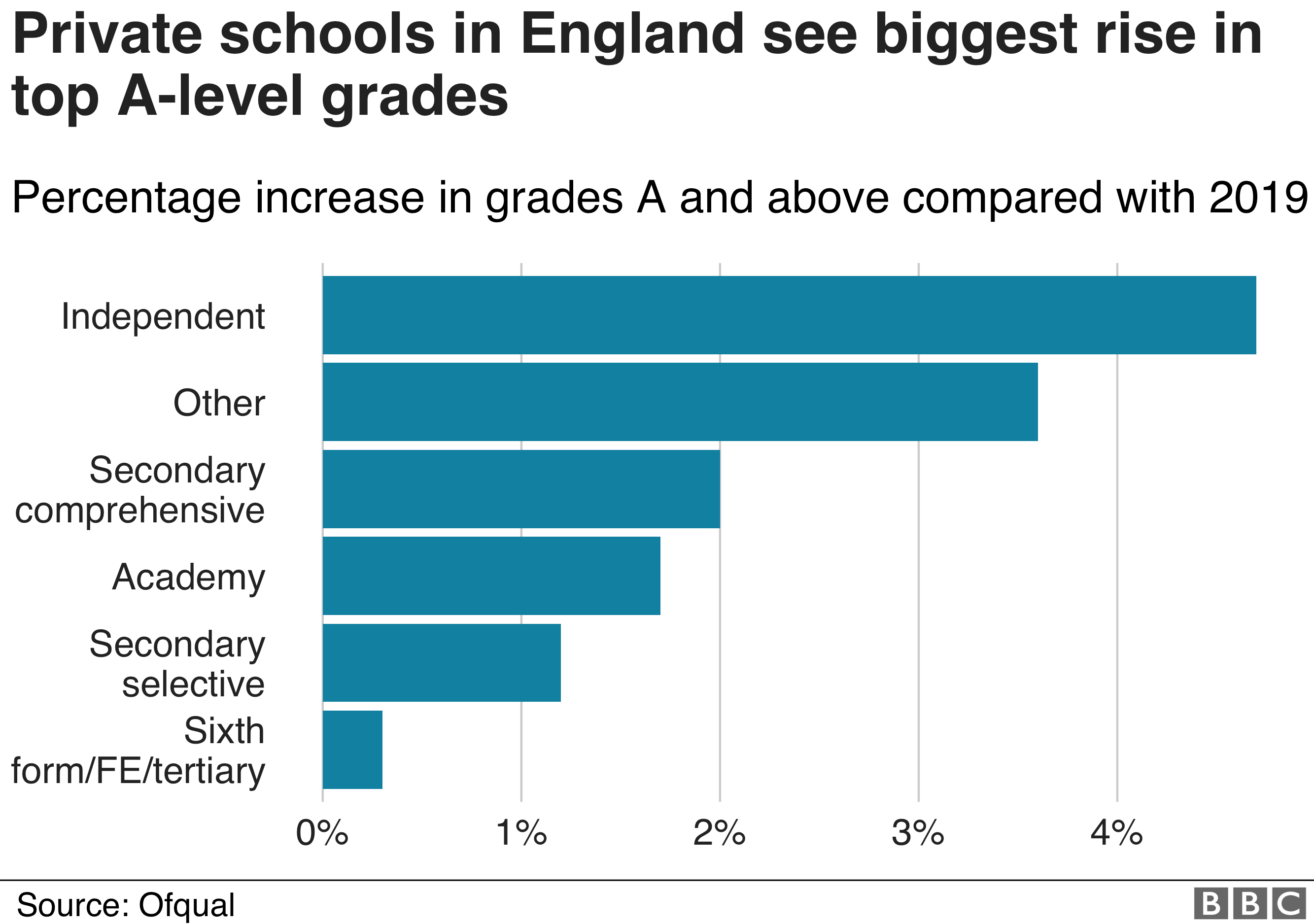

Many students complained that the results were inequitable and unfair, and people from all quarters demanded the fine-grained details of how the grades were calculated and why so many students had been downgraded. It quickly became apparent that the student’s own performance was not the only factor the algorithm took into account; significant weighting was also attached to the previous averaged results of the school the student was attending. But of course, not all schools are created equal.

This underlines the importance of ethics in this algorithmic system: the biases are endemic. The overall number of grade increases was small, but virtually all of these increases have been absorbed by private schools, which can charge upwards of £40,000 a year. We would suggest that this indicates the deep classist biases inherent within this algorithm.

Poorer students tend to go to schools that, on average, score considerably worse results than their private counterparts. These schools receive less funding, have difficulty attracting the best teachers, and are engaged in a daily battle against inequality and poverty simply to keep the lights on. There is also a racial component, as the poorer schools have a greater number of non-white students, who may do worse due to unconscious bias by their teachers.

Additionally, while it is important not to sling mud or spend an excessive amount of time blaming individuals, it is worth remembering that algorithms are designed, built, used, and operationalized by humans. The functioning of the algorithm is not a random accident, but the result of intent (though not malicious intent). Someone, somewhere (or more likely a committee of someones) made decisions based on what they thought would be important to the functioning of the algorithm. Their choice to include the previous results of the school could only be excessively punitive to those students who attend schools that do not do as well. Even in cases where the school does well on average, one unusually poor year of results could (and did) drag down the average of many students.
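To make the effect of that design choice concrete, here is a minimal sketch of how weighting a school's historical results can pull an individual student's grade up or down. It is a toy illustration only and not the model that was actually used: the function name `blended_grade`, the 0.6 weighting, the numeric grade scale, and all of the sample figures are our own assumptions.

```python
from statistics import mean
from typing import Sequence


def blended_grade(
    student_prior: float,
    school_history: Sequence[float],
    school_weight: float = 0.6,  # hypothetical weighting, chosen for illustration
) -> float:
    """Blend a student's own prior attainment with their school's past average.

    All values are on an invented numeric scale (higher is better).
    """
    school_average = mean(school_history)
    return school_weight * school_average + (1 - school_weight) * student_prior


# The same strong student (hypothetical prior attainment of 5.0) at two schools.
strong_student = 5.0
high_scoring_school = [5.0, 5.2, 5.1]  # invented yearly averages
low_scoring_school = [3.0, 3.2, 2.8]   # invented yearly averages

at_high = blended_grade(strong_student, high_scoring_school)
at_low = blended_grade(strong_student, low_scoring_school)

# One unusually poor year lowers the school average for everyone who follows.
steady_school = [4.0, 4.0, 4.0]
one_bad_year = [4.0, 4.0, 1.0]

steady = blended_grade(4.0, steady_school)
dragged = blended_grade(4.0, one_bad_year)

print(f"strong student, high-scoring school: {at_high:.2f}")
print(f"strong student, low-scoring school:  {at_low:.2f}")
print(f"average student, steady school:      {steady:.2f}")
print(f"average student, one bad year:       {dragged:.2f}")
```

Under these assumptions, the identical student ends up with a lower result purely because of where they went to school, and the larger the school weighting, the wider that gap becomes.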

After some initial prevarication, the government has responded by stating that it will cover the cost of all grade appeals, though the system continues to require the school to submit the appeal, subject to its own internal procedures, rather than having the students submit them. However, with the government covering the cost of all appeals, schools are encouraged to challenge cases that may be difficult to prove. The cost to the UK taxpayer is expected to be in the range of £15m. In addition to this, a ‘triple lock’ has been promised, giving students three options: they can sit an exam this autumn if they believe they could have scored higher than their algorithmically predicted grades, they can make a general appeal, or they can appeal on the basis of a valid ‘mock’ exam result, thus changing their grade to whatever they scored in their mocks.

Again, there are issues here. Mock exams are run on a school-by-school basis. Not every school runs mock exams, and it is not clear whether many schools run them in a manner that will be satisfactory to the Department for Education. It is nonetheless an important step in the right direction, though we would suggest that it could have been avoided had the issue of social class been taken into account before the predicted results were sent to students. But as the old adage goes: you cannot learn a thing you think you know. Let us hope the UK government have learned this lesson.